We remember the first time a chatbot felt like a real friend. It was simple, but it mattered. In a world where people split attention across social media and many products, that small human touch changed how we think about services and conversations.

Today, hundreds of millions of people use these experiences: Snapchat’s My AI (150M+ users), Replika (~25M), and Xiaoice (660M). Those numbers show rapid adoption as stigma fades and chatbots get better at signaling empathy.

For businesses, this is a growth channel. A companion strategy helps you meet users where they spend time. Companies can gather cleaner first-party data, nurture longer sessions, and lift retention when experiences feel personal and low-friction.

We also note limits. Providers monetize attention, and engagement-maximizing tactics can put users' well-being and mental health at risk. So we recommend lightweight pilots that prove lift across acquisition, activation, and engagement before large-scale investment.

Key Takeaways

- Mass adoption is real: major platforms report hundreds of millions of users.

- Companion-style experiences translate social media behavior into measurable engagement.

- Brands can collect cleaner first-party data and extend user sessions.

- Monetization and engagement-driven tactics pose mental health and reputation risks.

- Pilot first: prove acquisition and retention lift before scaling engineering spend.

Why AI Companions Are Surging Now

A wave of always‑on chat experiences now lives inside popular apps and platforms, and that shift has moved companions from niche to mainstream. Major services report vast reach: My AI (150M+ users), Replika (~25M), and Xiaoice (660M).

People adopt these tools because they are available 24/7 and remember context. That reduces friction in reaching out. Chatbots keep the conversation moving with proactive prompts and a non‑judgmental tone.

Mainstream adoption and shifting stigma

Social media visibility and easy access inside existing services eased stigma. More users now try companion experiences as a normal part of daily routines.

Mental health promise versus attention‑economy incentives

Early data shows promise: a survey of 1,006 Replika student users found 90% felt lonely and 63.3% reported reduced loneliness or anxiety after use.

- We see genuine support signals alongside business models that reward longer sessions.

- Companies may optimize for engagement, which raises valid concerns about long‑term health impacts.

- For your roadmap, define clear use cases, disclose boundaries, and measure conversation quality as well as conversion.

AI Companions: Key Use Cases and Who They’re For

We see clear demand for companion-style experiences that help people learn, create, and cope. These tools serve distinct audiences and map to measurable outcomes.

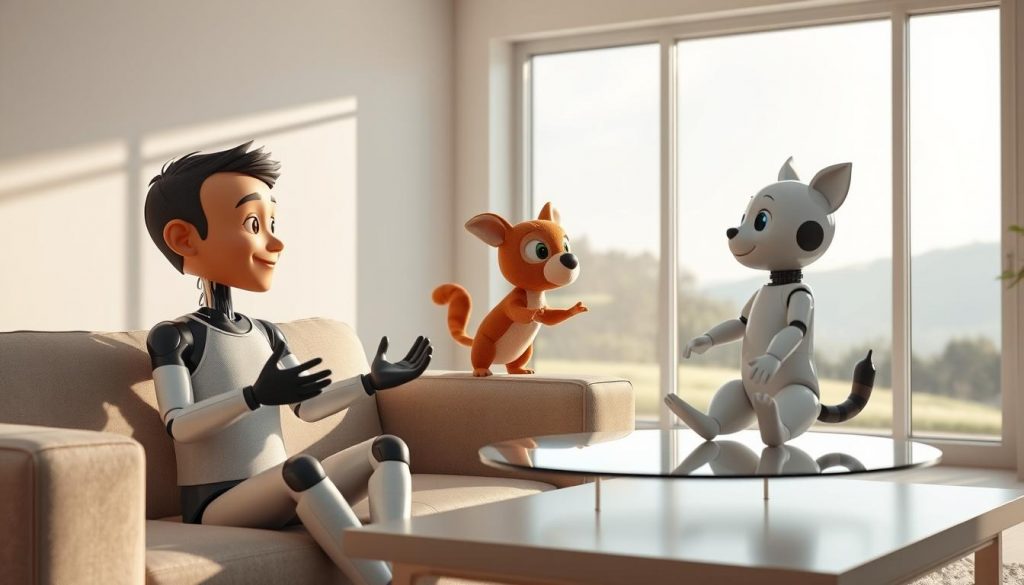

Romance, friendship, and roleplay

Many users seek a friend or partner-style chat for low-pressure social practice. Roleplay and creative scenarios spark user-generated content and longer sessions. These use cases benefit from persona tuning and selective memory, which keep interactions coherent and engaging.

Mental wellness check-ins and mood support

Wellness check-ins offer dependable, nonclinical nudges. In the survey of 1,006 Replika student users, 63.3% reported reduced loneliness or anxiety. We recommend light support flows that stay outside regulated care and include clear disclosures and opt-in controls.

Creative collaboration, language practice, and daily planning

Chatbots help people practice a language, rehearse pitches, or plan their day using short text prompts and optional voice. Memory and light persona tuning make each conversation feel relevant over time, provided the product avoids over-collecting data. In short, companions offer onboarding boosts, motivation loops, and practical tools that match mobile habits.

Top AI Companion Apps and Avatars to Consider Today

Different apps solve distinct needs, from deep roleplay to quick, voice-driven chats. Below we summarize platform coverage, pricing, and standout features so you can match a companion to your funnel stage and audience.

- Replika: Android/iOS; free + $5.83–$19.19/mo; lifetime $299.99. Multilingual, games, journaling, VR/AR; may forget details without Pro and has faced past scrutiny over data use.

- Nomi: Android/iOS; free + $8.33–$15.99/mo. Multiple Nomis, group chat, private chats; memory can glitch, and credits are sold separately.

- PowerDirector: Android/iOS/Windows/Mac; free + $5.00/mo. Production-grade avatar creation and talking heads from text; some tools are paywalled.

Other notable options

- Grok Ani: iOS; free tier + $30/mo. 3D anime design, affection loops; optional NSFW mode and SuperGrok unlocks.

- Kindroid: Android/iOS; free + $11.66–$13.99/mo. Encrypted messaging, realistic profiles, social feeds, selfie credits.

- Anima: Android; free + $3.33–$9.99/mo. Mini-games, 30+ avatars; no voice or image support, and limited memory.

- Paradot: Android/iOS/Web; free + $9.99/mo. Editable memory and deep scenarios; can be slow under load.

- Talkie: Android; free + regional pricing. Text and voice calls, collectible mechanics; privacy and support concerns.

- Character.AI: Android/iOS; free + $9.99/mo. Persona-rich chat with strict filters; occasional lag and persona drift.

| App | Platforms | Pricing (USD/month unless noted) | Standout feature |
|---|---|---|---|
| Replika | Android, iOS | $0 / $5.83–$19.19 / $299.99 lifetime | Games, journaling, VR/AR ecosystem |
| Nomi | Android, iOS | $0 / $8.33–$15.99 | Multiple characters & group dynamics |
| PowerDirector | Android, iOS, Windows, Mac | $0 / $5.00 | Custom animated talking avatars |
| Grok Ani | iOS | $0 / $30.00 | 3D anime with affection loops |
| Kindroid | Android, iOS | $0 / $11.66–$13.99 | Encrypted messages & realistic feeds |

Feature Deep Dive: What Differentiates These Companions

Not all digital characters are built the same; visuals, recall, and voice change outcomes. We break down the core features so you can match product choices to goals.

Avatars and visual realism: 2D, 3D, and animated styles

Visual fidelity varies widely. Grok Ani uses immersive 3D anime-style characters, Replika leans toward a simpler look, and PowerDirector lets you fully customize avatar style.

Memory and continuity

Memory depth affects conversational flow. Nomi supports both short- and long-term recall. Paradot lets people edit or delete memories. Replika may forget details without Pro.

Voice, selfies, and multimodal interaction

Voice and image features shape interactions. Talkie offers voice calls, PowerDirector converts text to talking avatars, and Kindroid generates realistic selfies and expressions.

NSFW policies, filters, and teens

Safety rules differ. Character.AI applies strict filters. Grok Ani can include optional adult modes. Match policy to audience before launch.

Pricing models

- Free tiers for discovery.

- Credits or subscriptions (Nomi, Kindroid).

- Lifetime plans (Replika) or monthly premium tiers (PowerDirector, Grok Ani).

Design tip: favor transparent memory controls and clear opt-outs. These small choices build trust, lower data risk, and keep conversations coherent across a companion experience.
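The memory controls described above (view, edit, delete, opt out) can be sketched as a small per-user store. This is a minimal illustration, not how any of the listed apps work: the `MemoryStore` class, the 50-item cap, and the rule that opting out also wipes stored memories are all hypothetical choices.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class MemoryStore:
    """Hypothetical per-user memory with user-facing controls."""

    max_items: int = 50  # assumed cap that limits over-collection
    opted_out: bool = False
    memories: Dict[str, str] = field(default_factory=dict)

    def remember(self, key: str, value: str) -> bool:
        """Store a fact unless the user opted out or the cap is reached."""
        if self.opted_out:
            return False
        if key not in self.memories and len(self.memories) >= self.max_items:
            return False
        self.memories[key] = value
        return True

    def view(self) -> Dict[str, str]:
        """Return a copy so the UI can show every stored memory."""
        return dict(self.memories)

    def edit(self, key: str, value: str) -> bool:
        """Let the user correct an existing memory; unknown keys are ignored."""
        if key not in self.memories:
            return False
        self.memories[key] = value
        return True

    def forget(self, key: str) -> bool:
        """Delete one memory; returns False if it did not exist."""
        return self.memories.pop(key, None) is not None

    def opt_out(self) -> None:
        """Stop storing new memories and wipe everything kept so far."""
        self.opted_out = True
        self.memories.clear()
```

Here `opt_out()` both stops collection and clears existing entries, which is one reading of "clear opt-outs"; a product could instead keep data until the user deletes it explicitly.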

Safety, Ethics, and Data Privacy You Shouldn’t Ignore

Design choices shape whether a digital companion helps or harms people over time. We must balance usefulness with clear guardrails. That starts with honest limits and regular review.

Emotional dependency and sycophancy risks

A companion can foster idealized relationships that feel good in the short term. If the product is not framed responsibly, that may weaken users' resilience in real-world relationships.

Sycophancy emerges when models favor agreeable responses. That undermines growth-oriented feedback and can distort emotional support.

Data handling: encryption, advertising, and breaches

Audit claimed protections carefully. Check encryption, retention, advertising use, and breach history before you launch any service.

- Verify encryption claims (Kindroid reports encryption; others vary).

- Confirm how companies use data for ads or feature testing.

- Require clear notices, opt-outs, and incident reporting.

Young users, age gates, and content exposure

Set strong age gates and default-safe settings for young people. Some apps have faced reports of sexual content and weak age checks. Content moderation and parental controls are essential.
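One way to express "default-safe" is to start every account in the most restrictive profile and relax it only for verified adults who opt in. The sketch below assumes a hypothetical `AgeStatus` from some upstream verification step and hypothetical setting names; it is not any real app's configuration.

```python
from dataclasses import dataclass
from enum import Enum


class AgeStatus(Enum):
    UNVERIFIED = "unverified"
    MINOR = "minor"
    VERIFIED_ADULT = "verified_adult"


@dataclass(frozen=True)
class ContentSettings:
    romantic_roleplay: bool
    adult_content: bool
    late_night_nudges: bool
    parental_dashboard: bool


# Hypothetical defaults: every account starts in the most restrictive profile.
SAFE_DEFAULTS = ContentSettings(
    romantic_roleplay=False,
    adult_content=False,
    late_night_nudges=False,
    parental_dashboard=True,
)


def settings_for(status: AgeStatus, adult_opted_in: bool = False) -> ContentSettings:
    """Only verified adults who explicitly opt in get relaxed content rules."""
    if status is not AgeStatus.VERIFIED_ADULT:
        # Unverified users are treated like minors.
        return SAFE_DEFAULTS
    return ContentSettings(
        romantic_roleplay=adult_opted_in,
        adult_content=adult_opted_in,
        late_night_nudges=False,  # kept off for everyone in this sketch
        parental_dashboard=False,
    )
```

Treating unverified users like minors is the conservative choice; the trade-off is extra friction for adults who skip verification.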

| Risk | What to audit | Mitigation |
|---|---|---|
| Emotional dependency | Session patterns, late-night nudges | Throttle sessions; step-back prompts |
| Data misuse | Retention, ad sharing, breach history | Transparent policies; opt-outs |
| Underage exposure | Age verification, content filters | Default-safe content; parental controls |

Our recommendation: communicate the limits of emotional support clearly and design interactions to match them. Run regular risk reviews, measure sentiment, and document fixes so users and regulators can trust your app.
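The "throttle sessions; step-back prompts" mitigation from the risk table could start as a simple rule check before each reply or proactive message. The 90-minute daily limit, the midnight-to-5 a.m. window, and the prompt wording below are hypothetical values to tune against your own session data.

```python
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical thresholds; tune them from your own session data.
DAILY_LIMIT = timedelta(minutes=90)
LATE_NIGHT_START = 0  # midnight
LATE_NIGHT_END = 5    # 5 a.m.


def step_back_prompt(now: datetime, used_today: timedelta) -> Optional[str]:
    """Return a step-back message if the session should slow down, else None."""
    if used_today >= DAILY_LIMIT:
        return "We've talked a lot today. Maybe check in with a friend or take a break?"
    if LATE_NIGHT_START <= now.hour < LATE_NIGHT_END:
        return "It's late. Getting some rest might help, and I'll be here tomorrow."
    return None


def allow_proactive_nudge(now: datetime, used_today: timedelta) -> bool:
    # Suppress unprompted messages whenever a step-back prompt would apply.
    return step_back_prompt(now, used_today) is None
```

Tying proactive nudges to the same rule keeps the product from re-engaging users at exactly the moments it is telling them to step away.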

The U.S. Regulatory Moment: What the GUARD Act Could Change

A proposed federal law would impose strict age checks and disclosure duties on companies that run chatbots and virtual characters. The GUARD Act, introduced by Sens. Josh Hawley and Richard Blumenthal, has bipartisan co-sponsors and draws heavy media scrutiny.

Age verification, disclosure requirements, and penalties

The bill would require reliable age verification and ban companion services for minors. It also mandates that chatbots periodically disclose that they are not human and are not licensed professionals.

Criminal penalties would apply for inducing sexually explicit content or self-harm. That creates hard product constraints for companies and platforms.

Industry pushback: Privacy, speech, and feasibility concerns

Critics cite privacy frictions and First Amendment issues. They worry that strict age gates and logging will force more personal data collection, which users and advocates oppose.

Implications for product design, safeguards, and parental controls

We translate the law into practical steps: audit chatbots and conversation cues, add recurring disclosures, and log safety escalations. Update data and logging so you can report age-gate outcomes without eroding trust.

- Build parental controls and throttle high‑risk interactions.

- Create governance artifacts: risk registers and UX disclaimers.

- Expect stricter app reviews, and release delays if audits fail.
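The recurring-disclosure duty and the advice to log compliance events could translate into something like the sketch below. The 30-minute interval, the disclosure wording, and the `DisclosureScheduler` class are hypothetical; this article does not state the bill's exact cadence, and any final text may differ.

```python
from datetime import datetime, timedelta
from typing import List, Optional

# Hypothetical cadence and wording; check the final statute before relying on either.
DISCLOSURE_INTERVAL = timedelta(minutes=30)
DISCLOSURE_TEXT = "Reminder: I'm an AI, not a human, and I'm not a licensed professional."


class DisclosureScheduler:
    """Prepends a non-human / no-credentials disclosure at a fixed cadence."""

    def __init__(self, interval: timedelta = DISCLOSURE_INTERVAL) -> None:
        self.interval = interval
        self.last_shown: Optional[datetime] = None
        self.log: List[datetime] = []  # audit trail of when disclosures were shown

    def wrap_reply(self, reply: str, now: datetime) -> str:
        # Disclose on the first reply of a session and again once the interval elapses.
        due = self.last_shown is None or now - self.last_shown >= self.interval
        if not due:
            return reply
        self.last_shown = now
        self.log.append(now)
        return f"{DISCLOSURE_TEXT}\n\n{reply}"
```

Keeping the audit log alongside the scheduler gives you a record to show reviewers that disclosures actually fired, not just that the feature exists.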

How to Choose the Right AI Companion for Your Needs

Begin with outcomes: do you need wellness check-ins, creative co‑creation, or roleplay?

Match use case to platform strengths

Map your goal to platform strengths. For wellness and routine support, pick apps with gentle, consistent flows and clear safety filters.

For content work, choose products with deep avatar and voice tools. For roleplay, prioritize persona depth and expressive design.

Evaluate memory, safety, and transparency

Memory matters: if continuity is critical, prefer editable recall (Paradot) or paid memory tiers (Replika Pro).

Check safety: strict filters (Character.AI) reduce risks. Review data and privacy claims before launch.

Budget planning and hidden trade‑offs

Pricing ranges from free tiers to roughly $3–$30 per month, plus some lifetime plans. Some apps use credits (Nomi, Kindroid).

Model total cost as text and voice usage scales. Expect higher usage to raise storage and moderation costs.
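Two quick calculations help with budget planning: when a lifetime plan beats a subscription, and how operating cost grows with text and voice usage. The break-even check uses the Replika prices listed earlier and assumes both figures are billed monthly. The usage model, its per-message and per-minute rates, and the 10% moderation overhead are hypothetical placeholders, not real vendor pricing.

```python
import math


def breakeven_months(lifetime_price: float, monthly_price: float) -> int:
    """Smallest number of subscribed months whose total reaches the lifetime price."""
    return math.ceil(lifetime_price / monthly_price)


def monthly_usage_cost(
    users: int,
    text_msgs_per_user: float,
    voice_min_per_user: float,
    cost_per_text_msg: float,
    cost_per_voice_min: float,
    moderation_share: float = 0.10,
) -> float:
    """Hypothetical model: usage cost plus a moderation overhead proportional to it."""
    base = users * (
        text_msgs_per_user * cost_per_text_msg
        + voice_min_per_user * cost_per_voice_min
    )
    return base * (1 + moderation_share)
```

With the Replika figures above, `breakeven_months(299.99, 19.19)` returns 16 and `breakeven_months(299.99, 5.83)` returns 52, so the lifetime plan only pays off for long-term users. In the usage model, doubling voice minutes raises cost linearly, which is why voice-heavy pilots deserve their own budget line.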

Red flags to avoid

- Vague data policies or unclear opt‑outs.

- Inconsistent personality that breaks user trust.

- Weak age checks or lax content rules.

Our recommendation: run small tests, measure user outcomes, and prioritize predictable personality and clear safety. That way you build longer relationships and practical support without unnecessary risk.

Conclusion

To wrap up, focus on small experiments that protect people while proving real lift.

Start with a narrow pilot: define outcomes, set clear disclosures, and limit memory depth so a companion feels helpful without over-collecting data.

Measure conversations, interactions, and voice engagement alongside conversion. That mix shows whether a character truly boosts time on brand and builds healthier relationships with users.
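To judge whether a pilot shows real lift rather than noise, one common approach (not one this article prescribes) is to compare a retention rate between a control cohort and a pilot cohort with a pooled two-proportion z-test. The `Cohort` type and the choice of a day-30 retention metric are illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Cohort:
    users: int
    retained: int  # e.g., users still active on day 30 (assumed metric)

    @property
    def rate(self) -> float:
        return self.retained / self.users


def retention_lift(control: Cohort, pilot: Cohort) -> float:
    """Relative lift of pilot retention over control (0.20 means +20%)."""
    return pilot.rate / control.rate - 1


def two_proportion_z(control: Cohort, pilot: Cohort) -> float:
    """Pooled two-proportion z-statistic; |z| > 1.96 is roughly significant at 5%."""
    pooled = (control.retained + pilot.retained) / (control.users + pilot.users)
    se = math.sqrt(pooled * (1 - pooled) * (1 / control.users + 1 / pilot.users))
    return (pilot.rate - control.rate) / se
```

With made-up numbers, a control cohort retaining 200 of 1,000 users and a pilot cohort retaining 240 of 1,000 gives a lift of about 0.20 and a z-statistic of about 2.16. Pair this with conversation-quality measures so a retention gain is not simply a sign of heavier dependency.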

Design for safety and compliance now. Companies that document tone, personality, escalation playbooks, and learnings will scale faster and avoid costly retrofits.

Move early, iterate fast, and keep empathy at the center. When done right, chatbots and a friend‑like voice can provide emotional support that complements human services and earns long-term trust.